Farm

A farm is a group of Citrix servers that provides published applications to users and can be managed as a unit, enabling the administrator to configure features and settings for the entire farm rather than configuring each server individually. All the servers in the farm share a single data store.

A server farm is a grouping of servers running Citrix Presentation Server that can be managed as a unit, similar in principle to a network domain. When designing server farms, keep in mind the goal of providing users with the fastest possible application access while achieving the degree of centralized administration and network security that you need.

Data Store

The data store is where all the static information is stored. It provides a repository of persistent information about the farm (farm configuration information, published application configurations, server configurations, static policy configurations, XenApp administrator accounts, and printer configurations) that all servers in the farm can refer to.

The data store is the central repository in which almost the entire Citrix implementation is held. The administrators of the farm, the license server to point to, the whole farm configuration, the published applications and all their properties, the access permissions that control who gets access to what, the custom load evaluators, custom policies, and the configured printers and print drivers are all stored in this central repository called the data store.

Data Collector

The data collector stores the dynamic information, such as sessions, load, and published applications, for the servers in its zone and communicates that zone information to the data collectors in the other zones of the farm. A data collector is a Citrix Presentation Server whose IMA service takes on the additional role of tracking the dynamic information of the other Presentation Servers. This information is held in memory and is called the “dynamic store”. The data store is a database on disk; the dynamic store is information held in memory.
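To make the split between the two stores concrete, here is a toy Python model of a zone data collector. It is a sketch under stated assumptions, not Citrix's IMA implementation: the class names, the fields in `ServerState`, the server names, and the idea that every update is pushed immediately to every peer data collector are all hypothetical simplifications. It only illustrates that the dynamic store lives in memory and that zone information is shared with the data collectors of other zones.

```python
from dataclasses import dataclass, field


@dataclass
class ServerState:
    """Dynamic information tracked for one server (hypothetical fields)."""
    load: int
    sessions: int
    published_apps: set[str]


@dataclass
class ZoneDataCollector:
    """Toy zone data collector; not Citrix's actual IMA implementation."""
    zone: str
    # The "dynamic store": ordinary in-memory state, nothing is written to disk.
    dynamic_store: dict[str, ServerState] = field(default_factory=dict)
    # Data collectors of the other zones in the farm.
    peers: list["ZoneDataCollector"] = field(default_factory=list)

    def report(self, server: str, state: ServerState) -> None:
        """Record an update from a member server and forward it to other zones."""
        self.dynamic_store[server] = state
        for peer in self.peers:
            peer.dynamic_store[server] = state

    def servers_publishing(self, app: str) -> list[str]:
        """Servers in any zone currently known to publish the given application."""
        return sorted(
            name for name, st in self.dynamic_store.items() if app in st.published_apps
        )


east = ZoneDataCollector("East")
west = ZoneDataCollector("West")
east.peers.append(west)
west.peers.append(east)

east.report("CPS01", ServerState(load=2500, sessions=12, published_apps={"Word"}))
west.report("CPS02", ServerState(load=4000, sessions=20, published_apps={"Word", "Excel"}))
print(west.servers_publishing("Word"))  # ['CPS01', 'CPS02']
```

Because the state is only held in memory, restarting the toy collector loses it, which is the key difference from the on-disk data store.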

To view the contents of the in-memory dynamic store on the data collector, use the “queryds” command. QueryDS can be found in the "support\debug" folder of your Presentation Server installation source files. To determine which server is acting as the data collector in a zone, run "query farm /zone" from the command line.

LHC

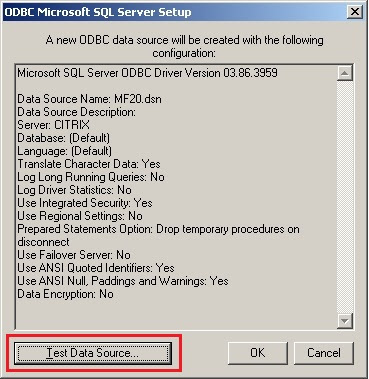

The IMA service running on each Presentation Server downloads the information it needs from the central data store into a local MDB database called the local host cache, or “LHC.” (The location of the local host cache is specified via a DSN referenced in the registry of the Presentation Server, at HKLM\SOFTWARE\Citrix\IMA\LHCDatasource\DataSourceName. By default this is a file called “Imalhc.dsn” and is stored in the same place as MF20.dsn.)

Each Presentation Server is smart enough to only download information from the data store that is relevant to it, meaning that the local host cache is unique for every server. Citrix created the local host cache for two reasons:

1. It permits a server to function in the absence of data store connectivity.

2. It improves performance by caching information used by ICA Clients for enumeration and application resolution.

The LHC is an Access database (Imalhc.mdb) stored by default in the "<ProgramFiles>\Citrix\Independent Management Architecture" folder.

The LHC contains the following information:

1. All servers in the farm, and their basic information.

2. All applications published within the farm and their properties.

3. All Windows network domain trust relationships within the farm.

4. All information specific to the server itself (product code, SNMP settings, licensing information).
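The list above implies that part of the LHC is the same on every server (servers, applications, trust relationships) while part of it is specific to one server, which is why each LHC is unique. The sketch below models that filtering with plain Python dictionaries; the layout of `DATA_STORE`, its field names, and the server names are hypothetical and do not reflect the real data store schema.

```python
import copy

# Hypothetical, heavily simplified data store; not the real data store schema.
DATA_STORE = {
    "servers": {"CPS01": {"zone": "East"}, "CPS02": {"zone": "West"}},
    "applications": {"Word": {"servers": ["CPS01", "CPS02"]}},
    "trusts": [("CORP", "SALES")],
    "server_settings": {
        "CPS01": {"product_code": "HYPOTHETICAL-A", "snmp_enabled": True},
        "CPS02": {"product_code": "HYPOTHETICAL-B", "snmp_enabled": False},
    },
}


def build_local_host_cache(data_store: dict, server: str) -> dict:
    """Return the subset of the data store that one server keeps locally."""
    return {
        # Farm-wide information: identical on every server.
        "servers": copy.deepcopy(data_store["servers"]),
        "applications": copy.deepcopy(data_store["applications"]),
        "trusts": copy.deepcopy(data_store["trusts"]),
        # Server-specific information: only this server's own settings.
        "own_settings": copy.deepcopy(data_store["server_settings"][server]),
    }


lhc_01 = build_local_host_cache(DATA_STORE, "CPS01")
lhc_02 = build_local_host_cache(DATA_STORE, "CPS02")
print(lhc_01["servers"] == lhc_02["servers"])  # True
print(lhc_01 == lhc_02)  # False: each server's LHC is unique
```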

The LHC is critical in a CPS environment. In fact, it is the local server's only interface to the data store. The local server's IMA service interacts only with the LHC; it never contacts the central data store except when it is updating the LHC. If the server loses its connection to the central data store, there is no limit to how long it will continue to function. (In MetaFrame XP this was limited to 48 or 96 hours, but only because the data store also stored license information.) Today the server can run indefinitely from the LHC and won't even skip a beat if the central connection is lost. You can even reboot the server while the central data store is down, and the IMA service will start from the LHC without any problem. (Older versions of MetaFrame required a registry modification to start the IMA service from the LHC.)

The LHC file is always in use while IMA is running, so it's not possible to delete it or anything. In theory this file could become corrupted, and if that happens, I guess all sorts of weird things could happen to your server. If you think this is the case in your environment, you can stop the IMA service and run the command "dsmaint recreatelhc" to recreate the local host cache file, although honestly I don't think this fixes anything very often. The local host cache is synchronised with the data store by the zone data collector every 30 minutes, and this interval can also be configured through the registry.
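The behavior described above is essentially a local cache that is refreshed periodically from a central source and keeps serving its existing copy when that source is unreachable. The following Python sketch models that pattern only; `LocalHostCache`, `fetch_from_data_store`, and the simulated clock are hypothetical names, the 30-minute default mirrors the interval mentioned above, and nothing here reflects how the IMA service is actually implemented.

```python
import time
from typing import Any, Callable, Optional


class LocalHostCache:
    """Toy model of the LHC pattern: every read is served from the local copy,
    and the central data store is contacted only to refresh that copy."""

    def __init__(
        self,
        fetch: Callable[[], dict],
        interval_s: float = 30 * 60,
        clock: Callable[[], float] = time.monotonic,
        initial: Optional[dict] = None,
    ) -> None:
        self._fetch = fetch               # reads the central data store
        self._interval_s = interval_s     # refresh interval (30 minutes by default)
        self._clock = clock
        self._data = dict(initial or {})  # e.g. the copy already on disk
        self._last_sync: Optional[float] = None

    def sync(self) -> bool:
        """Refresh from the data store; keep the current copy if it is unreachable."""
        try:
            self._data = self._fetch()
        except ConnectionError:
            return False
        self._last_sync = self._clock()
        return True

    def get(self, key: str) -> Any:
        """Serve a read locally, attempting a refresh first if one is due."""
        now = self._clock()
        if self._last_sync is None or now - self._last_sync >= self._interval_s:
            self.sync()
        return self._data.get(key)


# Simulated data store and clock for the demonstration.
state = {"up": True, "now": 0.0}
store = {"apps": ["Word"]}


def fetch_from_data_store() -> dict:
    if not state["up"]:
        raise ConnectionError("data store unreachable")
    return dict(store)


lhc = LocalHostCache(fetch_from_data_store, clock=lambda: state["now"])
print(lhc.get("apps"))  # ['Word'] (first read triggers a sync)

store["apps"] = ["Word", "Excel"]
state["up"] = False
state["now"] = 31 * 60
print(lhc.get("apps"))  # ['Word'] (sync fails, cached copy is still served)

# A "rebooted" server starts from the copy it already holds, data store still down.
rebooted = LocalHostCache(fetch_from_data_store, clock=lambda: state["now"],
                          initial={"apps": ["Word"]})
print(rebooted.get("apps"))  # ['Word']

state["up"] = True
print(lhc.get("apps"))  # ['Word', 'Excel']
```

The design choice being illustrated is that reads never depend on the central store being reachable; only freshness does.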

Restarting Servers at Scheduled Times

To optimize performance, you can restart a server automatically at specified intervals by creating a restart schedule.

Restart schedules are based on the local time for each server to which they apply. This means that if you apply a schedule to servers that are located in more than one time zone, the restarts do not happen simultaneously; each server is restarted at the selected time in its own time zone.
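As a worked example of the local-time rule, the sketch below converts a single 03:00 restart schedule into UTC for three hypothetical servers. It assumes fixed UTC offsets and ignores daylight saving time to stay self-contained; real servers would follow their configured Windows time zones.

```python
from datetime import date, datetime, timedelta, timezone

# Hypothetical servers and fixed UTC offsets in hours (daylight saving time ignored).
SERVER_OFFSETS = {
    "CPS-LONDON": 0,
    "CPS-NEWYORK": -5,
    "CPS-SINGAPORE": 8,
}


def restart_instants_utc(local_hour: int, day: date,
                         offsets: dict[str, int]) -> dict[str, datetime]:
    """For a schedule set to local_hour, return each server's restart time in UTC."""
    instants = {}
    for server, hours in offsets.items():
        local_tz = timezone(timedelta(hours=hours))
        local_restart = datetime(day.year, day.month, day.day, local_hour, tzinfo=local_tz)
        instants[server] = local_restart.astimezone(timezone.utc)
    return instants


schedule = restart_instants_utc(3, date(2024, 1, 15), SERVER_OFFSETS)
for server, when in sorted(schedule.items(), key=lambda item: item[1]):
    print(f"{server}: {when:%Y-%m-%d %H:%M} UTC")
```

This prints Singapore first (2024-01-14 19:00 UTC), then London (2024-01-15 03:00 UTC), then New York (2024-01-15 08:00 UTC): one schedule, three different instants.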

When the Citrix Independent Management Architecture service starts after a restart, it establishes a connection to the data store and updates the local host cache. This update can vary from a few hundred kilobytes of data to several megabytes of data, depending on the size and configuration of the server farm.

To reduce the load on the data store and to reduce the IMA service start time, Citrix recommends maintaining restart groups of no more than 100 servers. In large server farms with hundreds of servers, or when the database hardware is not sufficient, restart servers in groups of approximately 50, with at least 10-minute intervals between groups.
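The grouping guidance can be turned into a simple restart plan. This sketch splits a server list into groups of 50 and staggers the groups 10 minutes apart; the function name and server names are hypothetical, and it assumes the 10 minutes are measured between group start times. If a group takes longer than that to restart and refresh its local host cache, increase `gap`.

```python
from datetime import datetime, timedelta


def plan_restart_groups(
    servers: list[str],
    start: datetime,
    group_size: int = 50,
    gap: timedelta = timedelta(minutes=10),
) -> list[tuple[datetime, list[str]]]:
    """Split servers into restart groups and give each group a start time."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    plan = []
    for i in range(0, len(servers), group_size):
        group = servers[i:i + group_size]
        # Group k starts k * gap after the first group.
        when = start + gap * (i // group_size)
        plan.append((when, group))
    return plan


servers = [f"CPS{n:03d}" for n in range(1, 231)]  # 230 hypothetical servers
for when, group in plan_restart_groups(servers, datetime(2024, 1, 15, 2, 0)):
    print(f"{when:%H:%M}  {len(group):3d} servers  {group[0]}..{group[-1]}")
```

For 230 servers this yields five groups starting at 02:00, 02:10, 02:20, 02:30, and 02:40, with the last group holding the remaining 30 servers.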